The Shocking Truth: GPT Detectors Are Unfair to Non-Native English Writers | 7 Critical Findings

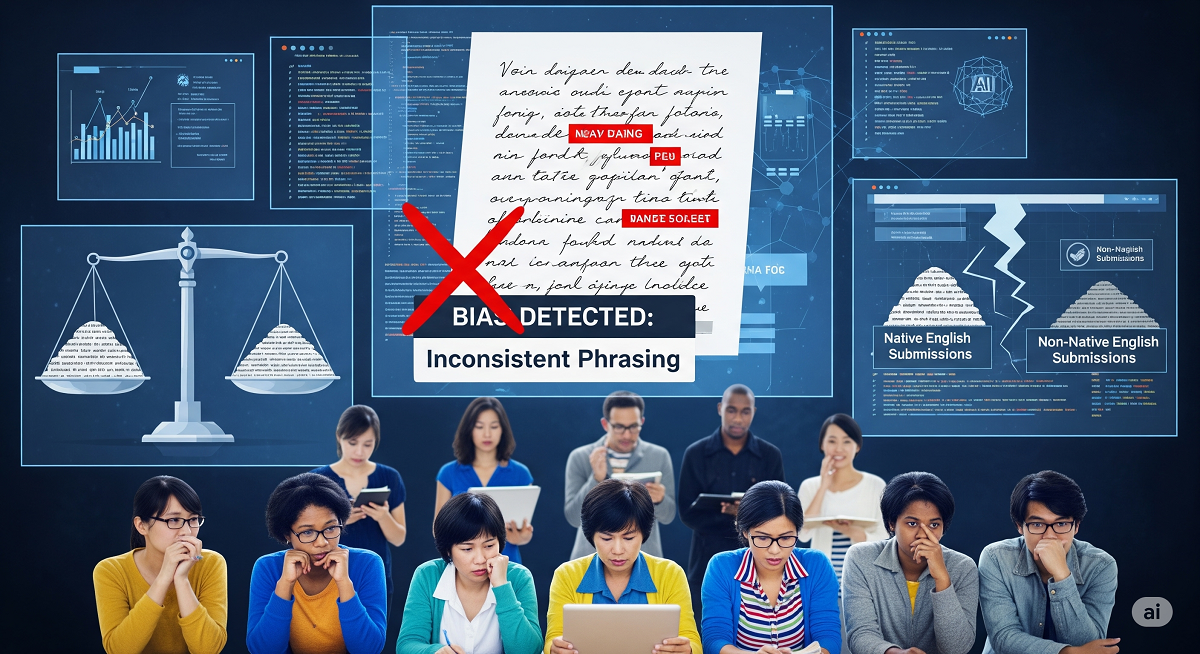

AI tools are evolving fast—but some of the systems meant to safeguard against misuse may be unintentionally harming the very people they’re supposed to protect. A 2023 study published in Patterns and preprinted on arXiv by researchers Liang, Yuksekgonul, Mao, Wu, and Zou has uncovered disturbing biases in popular GPT detection systems. Here’s why it matters.

🔍 High False Positives for Non-Native Writers

One of the most alarming findings is that, averaged across the detectors tested, over 60% of TOEFL essays written by non-native English speakers were wrongly flagged as AI-generated. In contrast, essays by U.S. eighth graders were almost always correctly identified as human-written.

Why it’s a problem:

This creates a dangerous precedent where students and professionals who write clearly and simply are punished by faulty AI detectors—undermining trust and inclusion.

🧠 The Flawed Reliance on “Perplexity”

Many AI detectors rely on a metric called perplexity, which measures how surprised a language model is by a text: the more predictable the wording, the lower the score. Non-native writers often use simpler vocabulary and grammar, which makes their writing more "predictable" and lowers its perplexity. Ironically, that makes it look more like it was written by AI.

Bottom line:

Simplified language ≠ AI writing. But detectors can’t tell the difference.
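To make the idea concrete, here is a minimal sketch of how a perplexity score can be computed and turned into a verdict. It assumes we already have the probability a language model assigned to each token; the probability lists, the `looks_ai_generated` helper, and the threshold of 10 are all made up for illustration and are not taken from the study or from any real detector.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp of the average negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)

def looks_ai_generated(token_probs, threshold=10.0):
    """Hypothetical rule: low perplexity (very predictable text) gets flagged."""
    return perplexity(token_probs) < threshold

# Hypothetical per-token probabilities from a language model.
predictable_text = [0.5, 0.5, 0.5, 0.5]    # common words, simple grammar
surprising_text = [0.5, 0.05, 0.2, 0.01]   # rarer words, unusual phrasing

print(perplexity(predictable_text))          # about 2.0
print(perplexity(surprising_text))           # about 11.9
print(looks_ai_generated(predictable_text))  # True
print(looks_ai_generated(surprising_text))   # False
```

Under this toy rule, the plainly written text is the one that gets flagged, even though nothing in the score says who actually wrote it.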

🛠️ Editing Can Dramatically Reduce Detection Bias

When the researchers enhanced TOEFL essays using ChatGPT to expand vocabulary and add literary variation, false-positive rates dropped by nearly 50 percentage points, from ~61% to about 12%.

✅ This suggests GPT detectors judge based on language complexity, not authenticity.

🤖 AI-Generated Text Can Evade Detection

Even more concerning, when ChatGPT-generated text was rewritten with richer vocabulary, detection rates dropped sharply.

So, while real student work gets flagged, actual AI writing can slip through with minor edits. This undermines the very purpose of these tools.

⚠️ Discriminatory Risk for Non-Native and Marginalized Writers

False accusations of AI use can severely impact:

- Academic performance
- Job applications
- Professional credibility
- Mental health and confidence

🛑 Detection tools must not become digital gatekeepers that amplify inequality.

🎭 Detection Systems Are Easily Manipulated

By simply prompting ChatGPT to “elevate the language” of its output, researchers sharply reduced detection rates.

This highlights a major flaw: bad actors can game the system, while honest writers bear the consequences.

📣 Urgent Call for Fairer, Smarter Detection

The authors recommend:

- Avoiding AI detectors in high-stakes settings like education or hiring
- Creating fairer algorithms that consider linguistic diversity
- Evaluating tools on varied, global writing samples
- Recognizing the limits of current detection methods
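One way to act on the evaluation recommendation is to measure a detector's false-positive rate separately for each group of writers. The sketch below is only an illustration: the function name, the group labels, and the sample verdicts are invented here and are not the study's data or method.

```python
from collections import defaultdict

def false_positive_rate_by_group(samples):
    """samples: (group, flagged_as_ai) pairs for texts known to be human-written."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, flagged_as_ai in samples:
        totals[group] += 1
        if flagged_as_ai:
            flagged[group] += 1
    # Share of human-written texts wrongly flagged, per group.
    return {group: flagged[group] / totals[group] for group in totals}

# Made-up detector verdicts on human-written essays (illustrative only).
samples = [
    ("non_native", True), ("non_native", True), ("non_native", False),
    ("native", False), ("native", False), ("native", True), ("native", False),
]

rates = false_positive_rate_by_group(samples)
for group, rate in rates.items():
    print(f"{group}: {rate:.0%} of human-written texts wrongly flagged")
```

A large gap between groups is a warning sign that the tool should not be used for decisions that affect those writers.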

🧭 Final Thought: Smarter AI Needs Smarter Ethics

This isn’t just a tech issue—it’s a human one. GPT detectors should protect integrity, not penalize non-native writers or low-resource communities. As AI grows in power, fairness must be a top priority.

Before using detection tools in educational or evaluative settings, ask: Are we punishing clarity? Are we encouraging conformity over creativity?

Let’s build tools that respect diversity, not undermine it.

📎 Study Reference: GPT Detectors Are Biased Against Non-Native English Writers – Liang et al., Patterns, July 2023 (arXiv link)

About the Author

Ahmed Elmalla is a senior ICT and Computer Science teacher at Sky International School in Jalal-Abad, Kyrgyzstan, with more than 19 years of international teaching and software development experience. He is recognized for delivering the Cambridge curriculum (IGCSE & A-Level Computer Science) as well as AP Computer Science A with a focus on deep conceptual understanding and exam readiness.

As a Cambridge ICT teacher in Kyrgyzstan, Ahmed designs structured lessons that combine theory, hands-on coding, and real-world applications. He teaches Python, Java, SQL, and algorithmic thinking while promoting higher-order cognitive skills and independent problem-solving.

His expertise extends to supporting slow learners and students with learning difficulties, using neuroscience-informed strategies and differentiated instruction techniques. This balanced approach has helped students significantly improve their academic confidence and performance.

Currently based in Central Asia, Ahmed also works with international students through online tutoring, serving learners from Malaysia, the United States, Germany, and beyond.

He remains committed to advancing digital education and empowering the next generation of programmers and innovators.