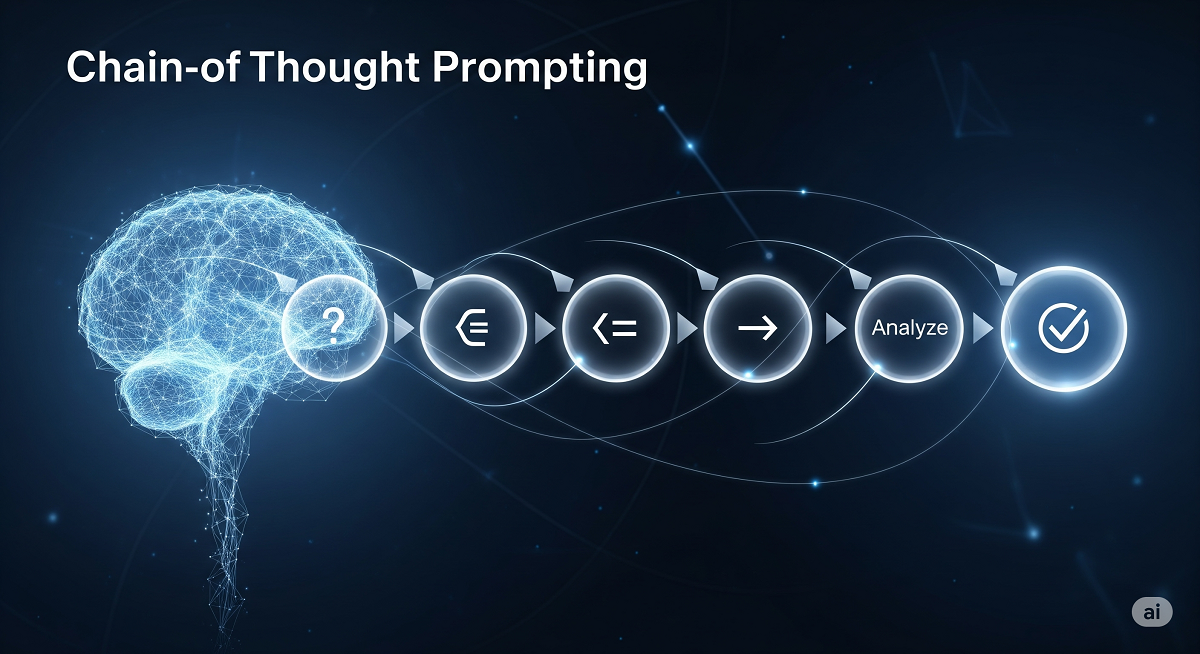

Unlocking AI Reasoning: How Chain-of-Thought Prompting Enhances Problem-Solving

Introduction

Large language models (LLMs) like GPT-3 and PaLM have revolutionized AI with their ability to generate human-like text. However, they often struggle with complex reasoning tasks that require multi-step logic—such as math word problems, commonsense reasoning, or symbolic operations.

A technique called Chain-of-Thought (CoT) prompting substantially improves performance on such tasks by prompting models to "think step-by-step" before answering, much like humans do. In this blog post, we’ll explore:

- What Chain-of-Thought prompting is and how it works.
- Why it significantly improves AI reasoning in arithmetic, commonsense, and symbolic tasks.
- Practical examples of how to use CoT in prompts.
- The future implications and limitations of this approach.

By the end, you’ll understand how to leverage CoT prompting to enhance AI performance in reasoning-heavy applications.

What is Chain-of-Thought Prompting?

Chain-of-Thought (CoT) prompting is a method where an AI model generates intermediate reasoning steps before arriving at a final answer. Instead of directly outputting a response, the model breaks down the problem into logical sub-steps—similar to how a student shows their work when solving a math problem.

Example: Standard Prompting vs. CoT Prompting

Standard Prompt:

Q: Roger has 5 tennis balls. He buys 2 more cans with 3 balls each. How many does he have now? A: The answer is 11.

CoT Prompt:

Q: Roger has 5 tennis balls. He buys 2 more cans with 3 balls each. How many does he have now? A: Roger started with 5 balls. 2 cans × 3 balls = 6 new balls. 5 + 6 = 11. The answer is 11.
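To make the difference concrete, here is a minimal Python sketch of how a few-shot CoT prompt can be assembled: worked exemplars (with or without their reasoning) are concatenated, and the new question is left with an open "A:" for the model to complete. The `Exemplar` class and `build_prompt` function are illustrative names, not part of any library, and sending the prompt to a model is left out.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    question: str
    rationale: str  # worked reasoning steps; may be empty
    answer: str


def format_exemplar(ex: Exemplar, with_reasoning: bool) -> str:
    """Render one worked example in the Q/A style used above."""
    if with_reasoning and ex.rationale:
        return f"Q: {ex.question}\nA: {ex.rationale} The answer is {ex.answer}."
    return f"Q: {ex.question}\nA: The answer is {ex.answer}."


def build_prompt(exemplars: list[Exemplar], new_question: str, with_reasoning: bool = True) -> str:
    """Concatenate the exemplars, then leave the new question's answer open for the model."""
    shots = [format_exemplar(ex, with_reasoning) for ex in exemplars]
    return "\n\n".join(shots + [f"Q: {new_question}\nA:"])


roger = Exemplar(
    question="Roger has 5 tennis balls. He buys 2 more cans with 3 balls each. How many does he have now?",
    rationale="Roger started with 5 balls. 2 cans × 3 balls = 6 new balls. 5 + 6 = 11.",
    answer="11",
)
pizza_question = "A pizza costs $12. If I buy 3, how much do I spend?"

cot_prompt = build_prompt([roger], pizza_question)
standard_prompt = build_prompt([roger], pizza_question, with_reasoning=False)
print(cot_prompt)
```

The only difference between the two prompts is whether the exemplar shows its work; the model is expected to imitate whichever format it sees.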

Why Does This Work?

- Decomposes Complexity: Multi-step problems become manageable.
- Improves Accuracy: Working through intermediate steps gives the model room to handle each part of the problem, which reduces errors on multi-step tasks.
- Enhances Interpretability: Users can follow the AI’s reasoning process.

Key Findings from the Research

The paper "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models" (Wei et al., NeurIPS 2022) reveals:

1. CoT is an Emergent Ability of Large Models

- In the paper's experiments, CoT gains appeared only in models of roughly 100B+ parameters (e.g., PaLM 540B, GPT-3 175B).
- Smaller models produced fluent but illogical reasoning steps, often performing worse than with standard prompting.

2. Boosts Performance Across Multiple Tasks

| Task | Standard Prompting | CoT Prompting | Improvement |
|---|---|---|---|
| Math (GSM8K) | 17.9% | 56.9% | +39.0 pts |
| Commonsense (StrategyQA) | 68.6% | 77.8% | +9.2 pts |
| Symbolic (Coin Flip) | 50% | 99.6% | +49.6 pts |

Accuracies are solve rates; improvements are absolute gains in percentage points.
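Because the Improvement column reports absolute differences rather than relative gains, it is easy to misquote. A quick Python check, using only the numbers from the table, shows how different the two readings are:

```python
# Accuracy (%) from the table above: task -> (standard prompting, CoT prompting)
results = {
    "GSM8K": (17.9, 56.9),
    "StrategyQA": (68.6, 77.8),
    "Coin Flip": (50.0, 99.6),
}

gains = {}
for task, (standard, cot) in results.items():
    points = cot - standard                # absolute gain, in percentage points
    relative = (cot / standard - 1) * 100  # relative gain, as a % of the baseline
    gains[task] = (round(points, 1), round(relative))
    print(f"{task}: +{points:.1f} pts absolute, +{relative:.0f}% relative")
```

For GSM8K, a 39-point absolute gain means the solve rate roughly tripled (about +218% relative), so it matters which figure you quote.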

3. Outperforms Competing Methods

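- Better than Equation-Only Prompts: Reasoning written in natural language outperformed prompts that gave only the equation, particularly on multi-step problems such as GSM8K.
- More Effective than Post-Hoc Explanations: Placing the same reasoning after the answer gave little benefit over standard prompting, so the reasoning has to come before the answer.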
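These comparisons are about exemplar format. The strings below illustrate, in our own wording rather than the paper's exact exemplars, what each variant looks like for the tennis-ball question:

```python
question = "Roger has 5 tennis balls. He buys 2 more cans with 3 balls each. How many does he have now?"
rationale = "Roger started with 5 balls. 2 cans × 3 balls = 6 new balls. 5 + 6 = 11."
equation = "5 + 2 × 3 = 11."
answer = "The answer is 11."

# One exemplar, four formats (illustrative wording, not the paper's exact exemplars)
variants = {
    "standard": f"Q: {question}\nA: {answer}",                            # answer only
    "equation_only": f"Q: {question}\nA: {equation} {answer}",            # math, no explanation
    "chain_of_thought": f"Q: {question}\nA: {rationale} {answer}",        # reasoning, then answer
    "reasoning_after_answer": f"Q: {question}\nA: {answer} {rationale}",  # post-hoc explanation
}

for name, exemplar in variants.items():
    print(f"--- {name} ---\n{exemplar}\n")
```

The last two variants contain identical text; only the order differs, which is exactly what the post-hoc ablation isolates.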

Practical Applications of CoT Prompting

1. Solving Math Word Problems

Prompt:

Q: A pizza costs $12. If I buy 3, how much do I spend? A: Each pizza costs $12. 3 pizzas × $12 = $36. The answer is $36.

Use Case: Educational AI tutors that explain solutions step-by-step.
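In practice, you usually need to pull the final answer out of a CoT completion before comparing it with the expected one. A minimal sketch, assuming the model follows the exemplars and ends its reasoning with a sentence of the form "The answer is X." (the `extract_answer` helper is hypothetical):

```python
import re
from typing import Optional


def extract_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'The answer is' on its line, minus a trailing period."""
    matches = re.findall(r"the answer is\s+([^\n]+)", completion, flags=re.IGNORECASE)
    if not matches:
        return None
    return matches[-1].strip().rstrip(".")


completion = "Each pizza costs $12. 3 pizzas × $12 = $36. The answer is $36."
print(extract_answer(completion))  # $36
```

Anything after "The answer is" on the same line is kept, so this only works if the answer sentence closes the line; taking the last match tolerates reasoning that mentions an earlier answer.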

2. Commonsense Question Answering

Prompt:

Q: Can a dog fly? A: Dogs lack wings and cannot generate lift. Therefore, they cannot fly. The answer is no.

Use Case: AI assistants providing logical justifications for answers.

3. Robot Task Planning

Prompt:

Human: "Bring me a non-fruit snack." Explanation: Find an energy bar (not a fruit). Plan: find(bar), pick(bar), deliver(bar).

Use Case: Robotics and automated workflow systems.
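Because the plan in this exemplar is written as function-like steps, it can be parsed and sanity-checked before a robot executes it. The sketch below assumes a hypothetical three-skill robot (`find`, `pick`, `deliver`) and the `action(object)` syntax shown above; it is not the interface of any specific robotics system.

```python
import re

# Hypothetical action syntax: "action(object)" steps separated by commas
STEP_PATTERN = re.compile(r"(\w+)\(([^)]*)\)")
ALLOWED_ACTIONS = {"find", "pick", "deliver"}  # hypothetical robot skill set


def parse_plan(plan: str) -> list[tuple[str, str]]:
    """Turn 'find(bar), pick(bar), deliver(bar)' into [(action, object), ...]."""
    return [(action, obj.strip()) for action, obj in STEP_PATTERN.findall(plan)]


def check_plan(steps: list[tuple[str, str]]) -> list[str]:
    """Return the problems found; an empty list means the plan looks executable."""
    problems = []
    found, held = set(), set()
    for action, obj in steps:
        if action not in ALLOWED_ACTIONS:
            problems.append(f"unknown action: {action}")
        elif action == "find":
            found.add(obj)
        elif action == "pick":
            if obj not in found:
                problems.append(f"pick({obj}) before find({obj})")
            held.add(obj)
        elif action == "deliver" and obj not in held:
            problems.append(f"deliver({obj}) before pick({obj})")
    return problems


steps = parse_plan("find(bar), pick(bar), deliver(bar)")
print(steps)              # [('find', 'bar'), ('pick', 'bar'), ('deliver', 'bar')]
print(check_plan(steps))  # []
```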

4. Date & Time Reasoning

Prompt:

Q: If today is 06/02/1943, what was the date 10 days ago? A: 10 days before 06/02 is 05/23. The answer is 05/23/1943.

Use Case: Scheduling assistants and calendar automation.
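Date questions like this are easy to check with ordinary code, which is useful for grading a model's answers. A short sketch using Python's standard `datetime` module, assuming dates are written MM/DD/YYYY as in the example (`days_before` is an illustrative helper name):

```python
from datetime import datetime, timedelta


def days_before(mmddyyyy: str, days: int) -> str:
    """Shift an MM/DD/YYYY date back by `days` days and format it the same way."""
    d = datetime.strptime(mmddyyyy, "%m/%d/%Y").date()
    return (d - timedelta(days=days)).strftime("%m/%d/%Y")


print(days_before("06/02/1943", 10))  # 05/23/1943
```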

Limitations & Future Directions

While CoT prompting is powerful, it has some challenges:

- Requires Large Models: In the original experiments, gains appeared only with models of roughly 100B+ parameters.
- Not Always Correct: An error in an intermediate step can carry through to a wrong final answer.
- Prompt Sensitivity: Performance varies based on how reasoning steps are phrased.

Future improvements may include:

- Self-Verification: Models cross-checking their reasoning.
- Hybrid Approaches: Combining CoT with retrieval-augmented generation.
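One narrow form of verification needs no model at all: recompute each arithmetic step in a generated chain and flag the ones that do not hold. This is a sketch of that idea, not a published method; it only recognizes bare `a op b = c` steps, so a step with words between the numbers (such as "2 cans × 3 balls = 6") is skipped rather than checked.

```python
import operator
import re

OPS = {"+": operator.add, "-": operator.sub, "×": operator.mul, "*": operator.mul, "/": operator.truediv}
NUM = r"(\d+(?:\.\d+)?)"
# Bare binary steps such as "5 + 6 = 11" or "3 × 12 = 36"
STEP = re.compile(NUM + r"\s*([+\-×*/])\s*" + NUM + r"\s*=\s*" + NUM)


def find_arithmetic_errors(rationale: str, tol: float = 1e-9) -> list[str]:
    """Recompute every recognized 'a op b = c' step and report the ones that don't hold."""
    errors = []
    for a, op, b, c in STEP.findall(rationale):
        x, y, claimed = float(a), float(b), float(c)
        if op == "/" and y == 0:
            errors.append(f"{a} / {b} = {c} (division by zero)")
            continue
        expected = OPS[op](x, y)
        if abs(expected - claimed) > tol:
            errors.append(f"{a} {op} {b} = {c} (expected {expected:g})")
    return errors


print(find_arithmetic_errors("Roger started with 5 balls. 2 cans × 3 balls = 6 new balls. 5 + 6 = 11."))  # []
print(find_arithmetic_errors("Roger started with 5 balls. 5 + 6 = 12."))  # ['5 + 6 = 12 (expected 11)']
```

A check like this catches slips in calculation but not flawed reasoning, such as adding numbers that should have been multiplied.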

Original Prompt Given to ChatGPT

*"Create a course outline for a 9-year-old IGCSE Computer Science class. Draft a basic outline of the key topics and learning goals, refine the outline by detailing each topic, and produce the complete version with a timeline and assignments."*

Why This Prompt Works

- Audience-Specific:
  - Explicitly states the age group (9-year-olds) and curriculum (IGCSE), helping the AI keep content age-appropriate.
  - Implicitly steers the AI away from advanced jargon (e.g., "functions" or "syntax") toward concrete activities.
- Structured Output Request:
  - Asks for three progressive versions:
    - Basic outline (key topics/goals).
    - Refined outline (detailed subtopics + activities).
    - Complete version (timeline + assignments).
  - Mirrors the CoT approach by decomposing the task into logical steps.
- Implied CoT Techniques:
  - Step-by-Step Progression: The prompt naturally guides the AI to:
    - Identify core concepts first (What is a computer?).
    - Break them into subtopics (Hardware vs. software).
    - Add interactive elements (Scratch projects).
  - Scaffolded Learning: Requests a timeline so that concepts build on each other (e.g., algorithms → flowcharts → coding).
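The prompt above asks for all three stages in a single request. An alternative design, sketched below, runs the same three stages as a chain of separate prompts, feeding each stage's output into the next. The `model` argument is a hypothetical stand-in for whatever LLM client you use (any function from prompt text to response text), and the stage wording is adapted from the original prompt.

```python
from typing import Callable

# Hypothetical stand-in for an LLM client: any function from prompt text to response text
Model = Callable[[str], str]

STAGES = [
    "Create a course outline for a {audience} class. "
    "Draft a basic outline of the key topics and learning goals.",
    "Refine this outline by detailing each topic:\n\n{previous}",
    "Produce the complete version of this outline, with a timeline and assignments:\n\n{previous}",
]


def run_staged_prompt(model: Model, audience: str) -> list[str]:
    """Run the stages in order, feeding each stage's output into the next prompt."""
    outputs: list[str] = []
    previous = ""
    for template in STAGES:
        prompt = template.format(audience=audience, previous=previous)
        previous = model(prompt)
        outputs.append(previous)
    return outputs


# Usage with a fake model that just echoes the first line of each prompt
def echo_model(prompt: str) -> str:
    return f"[response to: {prompt.splitlines()[0]}]"


for stage in run_staged_prompt(echo_model, "9-year-old IGCSE Computer Science"):
    print(stage)
```

Splitting the stages trades one call for three, but lets you review or edit the basic outline before the model builds on it.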

Key Prompt Design Choices

| Element | Purpose | CoT Alignment |
|---|---|---|
| "For 9-year-olds" | Ensures simplicity, avoids abstract theory. | Targets cognitive load appropriately. |
| "IGCSE" | Anchors content to a recognized standard. | Links to real-world benchmarks. |
| Three versions | Forces hierarchical thinking (broad → detailed). | Mimics decomposition in problem-solving. |
| "Assignments" | Encourages practical application. | Reinforces step-by-step practice. |

How to Adapt This Prompt

- For Younger Students (Age 6–8): *"Create a 6-week intro to computers for 6-year-olds, focusing on touchscreen basics and simple games. Include one hands-on activity per week."*
- For Advanced Students (Age 12+): *"Design a 12-week Python programming primer for 12-year-olds preparing for IGCSE. Include variables, loops, and a capstone project."*
- For Teacher Training: *"Generate a professional development workshop for teachers introducing CoT methods in K-12 computer science. Include discussion prompts and a lesson plan template."*
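The adaptations above vary the same few parameters: duration, audience, focus, and required deliverables. A small template sketch makes that explicit and reproduces the first adapted prompt exactly; the `CourseSpec` class and its field names are illustrative, not from any library.

```python
from dataclasses import dataclass


@dataclass
class CourseSpec:
    weeks: int
    course: str       # e.g. "intro to computers"
    audience: str     # e.g. "6-year-olds"
    focus: str        # what to emphasize
    deliverable: str  # what the course must include

    def to_prompt(self) -> str:
        return (f"Create a {self.weeks}-week {self.course} for {self.audience}, "
                f"focusing on {self.focus}. Include {self.deliverable}.")


younger = CourseSpec(6, "intro to computers", "6-year-olds",
                     "touchscreen basics and simple games", "one hands-on activity per week")
advanced = CourseSpec(12, "Python programming primer", "12-year-olds preparing for IGCSE",
                      "variables and loops", "a capstone project")
print(younger.to_prompt())
print(advanced.to_prompt())
```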

Example Output Using the Prompt

Basic Outline (AI-Generated Snippet)

Unit 3: Hardware vs. Software

- Learning Goal: Distinguish physical components from programs.
- Activity: Sort flashcards (e.g., "Mouse" → Hardware, "Game" → Software).

Refined Outline (AI-Generated Snippet)

Unit 7: Introduction to Scratch

- Subtopics: Interface tour, motion blocks.
- CoT Activity: "Plan a dance for your sprite: 1. Choose a sprite. 2. Add ‘move’ and ‘turn’ blocks. 3. Press ‘Run’ and debug if needed."

Complete Version (AI-Generated Snippet)

Week 5: Algorithms

- In-Class: Write steps to make a sandwich.
- Assignment: "Create an algorithm to feed a pet."
- CoT Prompt: "What happens if you swap Step 1 and Step 2?"

Why This Matters

- Teaches Computational Thinking: The prompt’s structure models how to break down complex topics (like coding) into child-friendly steps.
- Scalable Framework: Works for any age/subject by adjusting specificity (e.g., swap "Scratch" for "Python").

Pro Tip: Add "Use Chain-of-Thought explanations" to prompts when you want the AI to show its work (e.g., lesson plans, debugging exercises).

This prompt design ensures structured, age-appropriate, and actionable educational content.

Bonus: Teaching Computing to Young Learners

For educators preparing 9-year-olds for IGCSE Computer Science, here’s an abridged 12-week course outline (selected units shown) that uses hands-on activities and Chain-of-Thought (CoT) principles to simplify complex concepts.

Course Title:

"Computing Foundations for Young IGCSE Learners (Age 9)"

Duration: 12 weeks (1 session/week, 60–75 mins/session)

Course Goals:

By the end, students will:

- Understand computer hardware/software basics.
- Learn algorithmic thinking via Scratch programming.
- Practice digital citizenship and online safety.

Course Outline

Unit 1: What is a Computer?

- Activity: Label a computer diagram (Monitor, CPU, Keyboard).
- CoT Prompt: "List the steps to turn on a computer and open a game."

Unit 2: Input & Output Devices

- Activity: Sort cards into Input (mouse, keyboard) vs. Output (printer, speakers).
- Assignment: "Draw 3 devices you use daily and classify them."

Unit 5: Introduction to Algorithms

- CoT Exercise: Write step-by-step instructions to make a sandwich.
- Debugging Practice: "Find the error in this algorithm: 1. Pour juice. 2. Open cap."
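For teachers, the debugging exercise can be modeled as steps with preconditions: a step fails if something it needs is not yet true. This Python sketch is a teacher-facing illustration, not something students at this age would write; the precondition labels ("cap open", "juice poured") and the `find_first_error` helper are made up for the example.

```python
from typing import Optional

# A step is (instruction, facts it needs first, facts it makes true)
Step = tuple[str, set[str], set[str]]


def find_first_error(steps: list[Step]) -> Optional[str]:
    """Walk the steps in order; report the first one whose preconditions are not met."""
    state: set[str] = set()
    for number, (instruction, needs, makes) in enumerate(steps, start=1):
        missing = needs - state
        if missing:
            return f"Step {number} ({instruction}) needs: {', '.join(sorted(missing))}"
        state |= makes
    return None


buggy = [
    ("Pour juice", {"cap open"}, {"juice poured"}),
    ("Open cap", set(), {"cap open"}),
]
fixed = [buggy[1], buggy[0]]  # swap the two steps

print(find_first_error(buggy))  # Step 1 (Pour juice) needs: cap open
print(find_first_error(fixed))  # None
```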

Units 7–9: Scratch Programming

- Week 7: Animate a name using motion blocks (e.g., `move 10 steps`).
- Week 9: Build a maze game with `if-then` logic (e.g., "If sprite touches green, win!").

Unit 12: Final Project

- Showcase: Students demo Scratch games/animation.
- CoT Reflection: "Explain how your game works in 3 steps."

Why This Works for Young Learners

- Scaffolded Learning: Breaks concepts into bite-sized tasks (e.g., algorithms → flowcharts → code).
- Active Engagement: Games and role-playing (e.g., "pretend to be a router") cement understanding.
- Real-World Links: Relates abstract ideas (like networks) to home Wi-Fi.

Conclusion: Why CoT Prompting is a Game-Changer

Chain-of-Thought prompting substantially improves the reasoning performance of large AI models by prompting them to decompose problems into logical intermediate steps. This leads to:

✅ Higher accuracy in complex tasks.

✅ More interpretable AI decision-making.

✅ Broader applications in education, robotics, and customer support.

Try It Yourself!

Next time you prompt an AI, ask it to "think step-by-step"—you might be surprised by the improvement!